MasterpieceVR, Voxel Compression

- Chris Leu

- Jan 4, 2024

Updated: Mar 20, 2025

The very first major task I was assigned at MasterpieceVR was overhauling how the application handled voxel data. When I arrived, the system was hitting memory limits and suffering from unstable performance, rendering bugs, and even hard crashes. Moreover, it had been built to work with only a single block of voxel data, and as you might expect, supporting multiple layers of voxel data was a critical deliverable.

The biggest bottleneck was pulling the voxel data back from the GPU. A 2048^3 voxel texture holds about 8.6 billion voxels, which was simply not feasible to read back onto the CPU, and this was hurting save/load, undo/redo, and overall performance.
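
For a sense of scale, the voxel count works out as below. The post does not state the texture format, so the byte figures are assumptions: 1 byte per voxel (e.g. an 8-bit single-channel texture) and 4 bytes per voxel (e.g. a 32-bit float texture).

$$
2048^3 = \left(2^{11}\right)^3 = 2^{33} = 8{,}589{,}934{,}592 \approx 8.6 \times 10^{9}\ \text{voxels}
$$

$$
2^{33}\ \text{voxels} \times 1\ \text{B/voxel} = 8\ \text{GiB}, \qquad 2^{33}\ \text{voxels} \times 4\ \text{B/voxel} = 32\ \text{GiB}
$$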

In my research, the most common recommendation was Sparse Voxel Octrees (SVOs), which would have provided fantastic compression ratios. The largest concern was their substantially slower read-write speed, which was critical to the product: in a real-time VR application, even an otherwise mild dip in framerate has a tangible negative effect on the user's experience. After some discussion, the R&D lead and I decided to shelve the SVO overhaul.

Another well-known approach to compressing voxel data is Run-Length Encoding (RLE), which I dove into, and each detail I found was more favorable than the last. The research showed that its strengths matched our priorities: fast reads and writes, and fast compression and decompression. I was enthusiastic about this option, drafted a plain-language pitch document summarizing my findings, and began prototyping a version that would run on the GPU.
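
As a minimal illustration of the basic idea, the sketch below run-length encodes one row of voxels as (value, run length) pairs and decodes it back. It assumes 8-bit voxel values and a plain Python list per row; it is a CPU-side sketch of the general technique, not MasterpieceVR's code.

```python
from typing import List, Tuple


def rle_encode(values: List[int]) -> List[Tuple[int, int]]:
    """Encode a 1D sequence of voxel values as (value, run_length) pairs."""
    runs: List[Tuple[int, int]] = []
    for v in values:
        if runs and runs[-1][0] == v:
            # Same value as the current run: extend it.
            runs[-1] = (v, runs[-1][1] + 1)
        else:
            # Value changed (or first voxel): start a new run.
            runs.append((v, 1))
    return runs


def rle_decode(runs: List[Tuple[int, int]]) -> List[int]:
    """Expand (value, run_length) pairs back into the original sequence."""
    out: List[int] = []
    for value, length in runs:
        out.extend([value] * length)
    return out


row = [0, 0, 0, 0, 128, 255, 255, 255]
encoded = rle_encode(row)
print(encoded)  # [(0, 4), (128, 1), (255, 3)]
assert rle_decode(encoded) == row
```

Decoding is a simple sequential expansion, and random access within a row only requires walking the run list, which is part of why RLE keeps read and write costs low compared with deeper tree structures.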

Nearly all of the existing research I could find discussed RLE compression on the CPU, which cannot exploit massive parallelism the way a GPU can, whereas I was deliberately doing this compression on the GPU. This led to some uncertainty in the implementation, but it proved to be a massive boon to the system's performance in the end. In short, I drafted a pseudocode document detailing how the system would be adjusted to exploit the GPU's strengths.

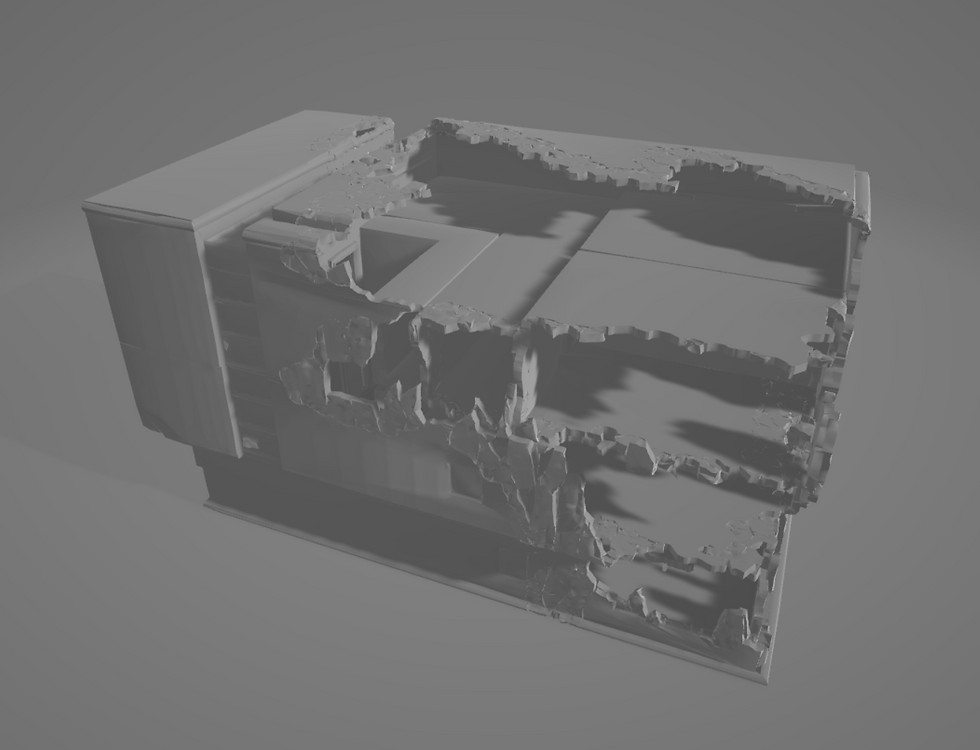
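
The GPU implementation itself isn't shown here, so the following is only a sketch of one common way to make RLE data-parallel: the flag / prefix-sum / scatter pattern used for stream compaction. It is written in plain Python, with each loop standing in for a GPU kernel in which every element is handled independently; the function names are hypothetical, and this is not necessarily how MasterpieceVR's version worked.

```python
from typing import List, Tuple


def exclusive_scan(xs: List[int]) -> List[int]:
    # Sequential stand-in for a parallel prefix sum (e.g. a Blelloch scan on the GPU).
    out, total = [], 0
    for x in xs:
        out.append(total)
        total += x
    return out


def parallel_style_rle(values: List[int]) -> Tuple[List[int], List[int]]:
    n = len(values)
    if n == 0:
        return [], []
    # Step 1 (map): one "thread" per voxel flags whether it starts a new run.
    heads = [1 if i == 0 or values[i] != values[i - 1] else 0 for i in range(n)]
    # Step 2 (scan): each run head learns its slot in the compact output.
    slots = exclusive_scan(heads)
    num_runs = slots[-1] + heads[-1]
    # Step 3 (scatter): run heads write their value and start index independently.
    run_values = [0] * num_runs
    run_starts = [0] * num_runs
    for i in range(n):
        if heads[i]:
            run_values[slots[i]] = values[i]
            run_starts[slots[i]] = i
    # Step 4 (map): each run's length is the gap to the next run's start.
    run_lengths = [
        (run_starts[k + 1] if k + 1 < num_runs else n) - run_starts[k]
        for k in range(num_runs)
    ]
    return run_values, run_lengths


print(parallel_style_rle([0, 0, 0, 0, 128, 255, 255, 255]))  # ([0, 128, 255], [4, 1, 3])
```

The point of this formulation is that no step depends on a running "current run" variable the way the sequential encoder does; apart from the scan, which has well-known parallel algorithms, every step is a per-element map or scatter.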

Once implementation began, I was readily able to compress the voxel data this way, with fantastic results on our average use case. Our voxel data was almost exclusively the minimum or maximum value, with only a thin band of intermediate values, which made it nearly an ideal case for Run-Length Encoding; compression in excess of 95% was common with user-generated data. Debug testing showed that even as we approached an adverse case for RLE (such as a Menger sponge), performance was still measurably better than the baseline.
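
To see why a thin band of intermediate values compresses so well, the sketch below counts runs in a hypothetical 2048-voxel column that is solid below a surface, empty above it, and has a 3-voxel gradient at the surface. The column contents and the 3-bytes-per-run storage cost (1 byte of value plus a 2-byte length) are assumptions for illustration, not measurements from the product.

```python
from itertools import groupby

# Assumed storage cost per run: 1 byte for the voxel value + 2 bytes for the run length.
BYTES_PER_RUN = 3


def count_runs(values):
    # groupby yields one group per maximal run of equal consecutive values.
    return sum(1 for _ in groupby(values))


# Hypothetical 2048-voxel column: solid (255) below the surface, empty (0) above,
# with a thin 3-voxel band of intermediate values at the surface.
column = [255] * 1000 + [192, 128, 64] + [0] * 1045
assert len(column) == 2048

runs = count_runs(column)
raw_bytes = len(column)  # 1 byte per voxel, uncompressed
rle_bytes = runs * BYTES_PER_RUN
print(runs, rle_bytes, f"{1 - rle_bytes / raw_bytes:.1%} smaller")
# prints: 5 15 99.3% smaller
```

The size of the encoded column depends on the number of value changes, not on the column's length, so mostly-uniform data with a thin surface band stays small regardless of resolution.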

Further optimizations were discussed, such as Huffman coding, but with the current functionality so improved over the baseline, we decided to focus on other features.

Going forward, RLE compression was a proven tactic, and I later also implemented it to capture texture changes for undo/redo, an otherwise incredibly memory-intensive task made easy by the techniques I mastered in this implementation.
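
The post doesn't describe how texture changes were captured, so this is a hypothetical sketch of one way RLE can fit undo/redo: store the XOR delta between texel values before and after an edit, run-length encoded. The XOR-delta approach and the function names are assumptions for illustration.

```python
from itertools import groupby
from typing import List, Tuple

Runs = List[Tuple[int, int]]


def encode_change(before: List[int], after: List[int]) -> Runs:
    # Hypothetical XOR-delta scheme: the delta is zero wherever a texel is unchanged,
    # so a small brush stroke becomes a few short runs between long runs of zeros.
    if len(before) != len(after):
        raise ValueError("snapshots must be the same size")
    delta = [b ^ a for b, a in zip(before, after)]
    return [(v, len(list(g))) for v, g in groupby(delta)]


def apply_change(texels: List[int], change: Runs) -> List[int]:
    # XOR with the same delta is its own inverse, so this performs both undo and redo.
    out, i = list(texels), 0
    for v, length in change:
        if v:  # zero runs are unchanged texels; skip them
            for j in range(i, i + length):
                out[j] ^= v
        i += length
    return out


before = [0] * 10
after = [0] * 4 + [200, 200] + [0] * 4
change = encode_change(before, after)
print(change)  # [(0, 4), (200, 2), (0, 4)]
assert apply_change(after, change) == before  # undo
assert apply_change(before, change) == after  # redo
```

Only the encoded delta needs to be kept on the undo stack, so memory scales with the size of the edit rather than with the size of the texture.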

Comments