MasterpieceVR, Texture Painting

- Chris Leu

- Jan 8, 2024

Updated: Feb 13, 2025

By 2020, MasterpieceVR had a robust mesh editing toolbox, but the company objective was to capture the full model-paint-animate pipeline, which meant allowing a user to intuitively paint their model in VR. At the time, no single tool offered this: texture painting in VR was limited to competitors that didn't support modeling or animation, while all-in-one suites like Blender didn't support virtual reality in any way.

The software which is now known as Medium by Adobe happened to be in ongoing development to compete in this market, but it was far from feature complete.

Our goal was real-time VR texture painting on fully featured texture targets, with brushes, masking, and a wealth of material options.

At a glance, that meant we would need to sample and splat onto many textures every frame, with no room for stalls or memory issues.

Over the next four years, I was the lead for this feature at MasterpieceVR, cultivating the texture tools from a novel tech demo to a fully featured suite shipped to consumers.

In its infancy, it was just a way to splat PNG decals on a trivial pre-baked mesh, but by the end it sported a complete material editing suite with full support for a variety of tools including paint, erase, decal, and fill.

It is unambiguously the project I'm most proud of.

In early 2020, the R&D Lead met with me to investigate implementing a barebones Unity project for projecting color data onto a mesh. In short order, we had a simple demo with prebaked meshes. It lacked most of the features and options we would aim to implement in the future, but it proved the approach was feasible at good framerates in VR.

Over the next several months, we rapidly iterated over several revisions, adjusting the functionality, visuals, and experience as a whole, before I moved the system into the Masterpiece Creator application.

Notable additions at this point include sliders to adjust the brush parameters, a stamping decal tool, the erase tool, and three texture targets, allowing the metal and gloss textures to be edited in addition to color.

We hit some early roadblocks maintaining framerate with high-quality collision against dynamic meshes, and optimized the related shaders for the same reason. A major breakthrough made real-time preview possible, and I implemented support for symmetry and arbitrary brushes.

At this point, in mid 2020, the texture painting system had three major phases: setup, projection, and cleanup.

In the setup phase, the selected mesh is first checked for valid UVs, opening a UI panel that lets the user apply cloud-based auto-UV if none are detected. Then a texture that lets the system convert from world position to mesh position is baked, and a high-quality collider is enabled.

Once in the projection phase, the tool brush begins ray-casting to the collider, and when a valid intersection is detected, the position and orientation are recorded. That data is passed to a shader which creates world-space bounds, using the baked texture to compare the expected and real positions. Valid positions sample the prepared material and apply it to the targeted textures, optionally blending with brush textures.
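The bounds test in the projection phase can be illustrated with a small CPU-side sketch. This is not the production shader: it assumes the baked texture stores one world-space position per texel, that the brush bounds are an oriented box built from the recorded hit position and orientation, and every name here (`Brush`, `brush_uv`, `paint`) is hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class Brush:
    """Hypothetical brush: a hit position plus an orthonormal orientation basis."""
    position: Vec3
    right: Vec3
    up: Vec3
    forward: Vec3      # projection direction
    half_size: float   # half the width/height of the projected footprint
    half_depth: float  # how far along the projection axis a texel may sit


def brush_uv(brush: Brush, texel_pos: Vec3) -> Optional[Tuple[float, float]]:
    """Return brush-space UV in [0, 1] if the texel lies inside the bounds, else None."""
    d = sub(texel_pos, brush.position)
    x, y, z = dot(d, brush.right), dot(d, brush.up), dot(d, brush.forward)
    if abs(x) > brush.half_size or abs(y) > brush.half_size or abs(z) > brush.half_depth:
        return None
    return (x / (2 * brush.half_size) + 0.5, y / (2 * brush.half_size) + 0.5)


def paint(position_texture: List[Optional[Vec3]], color_texture: list,
          brush: Brush, color) -> int:
    """Write `color` to every texel whose baked position is inside the brush bounds."""
    painted = 0
    for i, pos in enumerate(position_texture):
        if pos is None:  # texel not covered by any UV island
            continue
        if brush_uv(brush, pos) is not None:
            color_texture[i] = color
            painted += 1
    return painted
```

In a real shader the same comparison would run per texel on the GPU, and the returned UV would be used to sample the brush or material texture rather than writing a flat color.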

Finally, we reach the cleanup step. At this stage in development it was only responsible for removing the preview when the tool wasn't projecting onto the mesh, but later it would also handle undo/redo capture and projection gizmos.

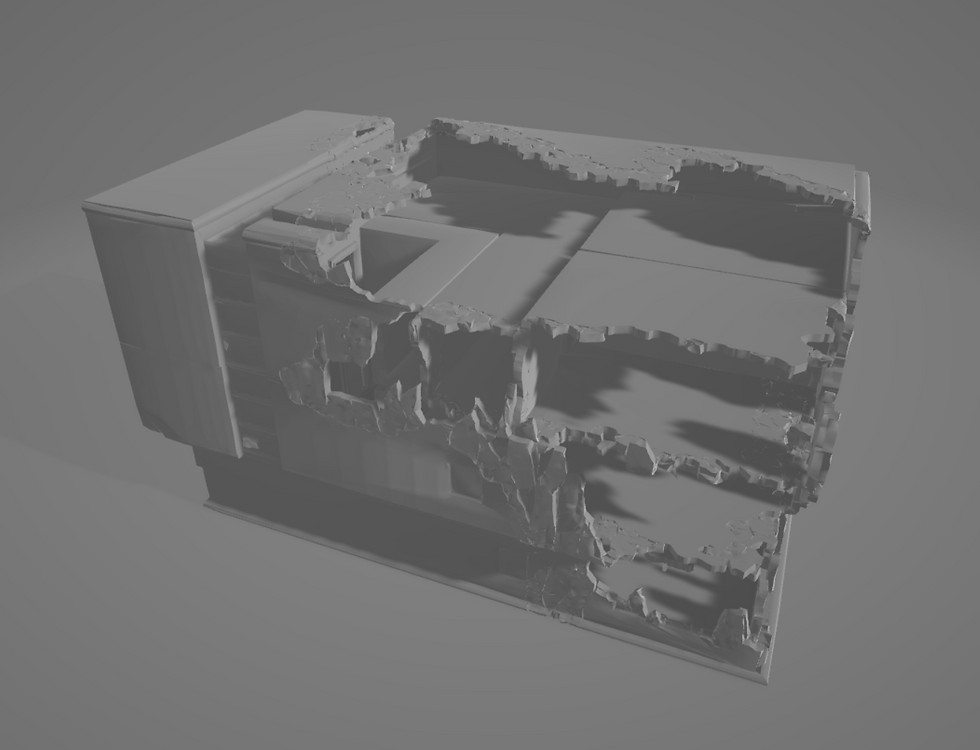

The Texture tool's first in-app implementation.

The largest issue at this point was deciding how to blend the projected textures. While box projection was appropriate for decals, it left very visible seams on full-size textures, which is unacceptable for a polished product.

I reached for a methodology I was familiar with, triplanar projection, which could extend the existing systems by generating a world normals texture and sampling it to blend textures smoothly across organic shapes.
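For readers unfamiliar with the technique, here is a minimal sketch of the usual triplanar blend, written in Python for readability rather than as shader code. The `sharpness` exponent, the `scale` parameter, and the `sample` callback are illustrative assumptions, not details of the Masterpiece implementation.

```python
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]
Color = Tuple[float, float, float]


def triplanar_weights(normal: Vec3, sharpness: float = 4.0) -> Vec3:
    """Blend weights per axis: |n|^sharpness, normalized so they sum to 1."""
    ax, ay, az = (abs(c) ** sharpness for c in normal)
    total = ax + ay + az
    if total == 0.0:
        raise ValueError("normal must be non-zero")
    return (ax / total, ay / total, az / total)


def triplanar_sample(sample: Callable[[float, float], Color],
                     position: Vec3, normal: Vec3,
                     scale: float = 1.0, sharpness: float = 4.0) -> Color:
    """Sample a 2D texture along each world axis and blend by the surface normal."""
    wx, wy, wz = triplanar_weights(normal, sharpness)
    x, y, z = (c * scale for c in position)
    cx = sample(y, z)  # projection along X uses the YZ plane
    cy = sample(x, z)  # projection along Y uses the XZ plane
    cz = sample(x, y)  # projection along Z uses the XY plane
    return tuple(wx * a + wy * b + wz * c for a, b, c in zip(cx, cy, cz))
```

A higher `sharpness` narrows the transition between the three projections, while a lower one spreads the blend further across curved surfaces.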

To summarize several months of development, the triplanar blending was so well-received that it became the default method of texture painting. I worked closely with the UI/UX team to create a custom in-VR material editing suite, which substantially expanded the usability of texture painting.

As Masterpiece Creator spun up for full release, we prepared simple demo footage of each mode, just scratching the surface of what is possible with the texture painting system and fully featured material editing suite.

At this point, in early 2021, Masterpiece X was publicly announced, featuring Texture Painting as an advertised mode! Completing the Model-Texture-Rig-Skin-Animate pipeline, we stood alone as the only VR software able to go from concept to polished product entirely in-headset. Moving from desktop hardware to untethered VR headsets came with its share of hardware limitations, and texture painting had to specialize its systems for the Quest, especially around memory management.

We prepared a collection of PBR materials for the user, and investigated ways of allowing for more. Experimentation with Unity's Addressables system found issues with file size and download rate, while AWS-hosted content proved to be most efficient for our purposes.

I continued to lead the texture painting mode as we shifted focus for mobile hardware, from a powerful, flexible toolset to a focused, optimized experience. By the title launch in 2022, texture painting was feature complete, running at competitive framerates on the Meta Quest 2.
